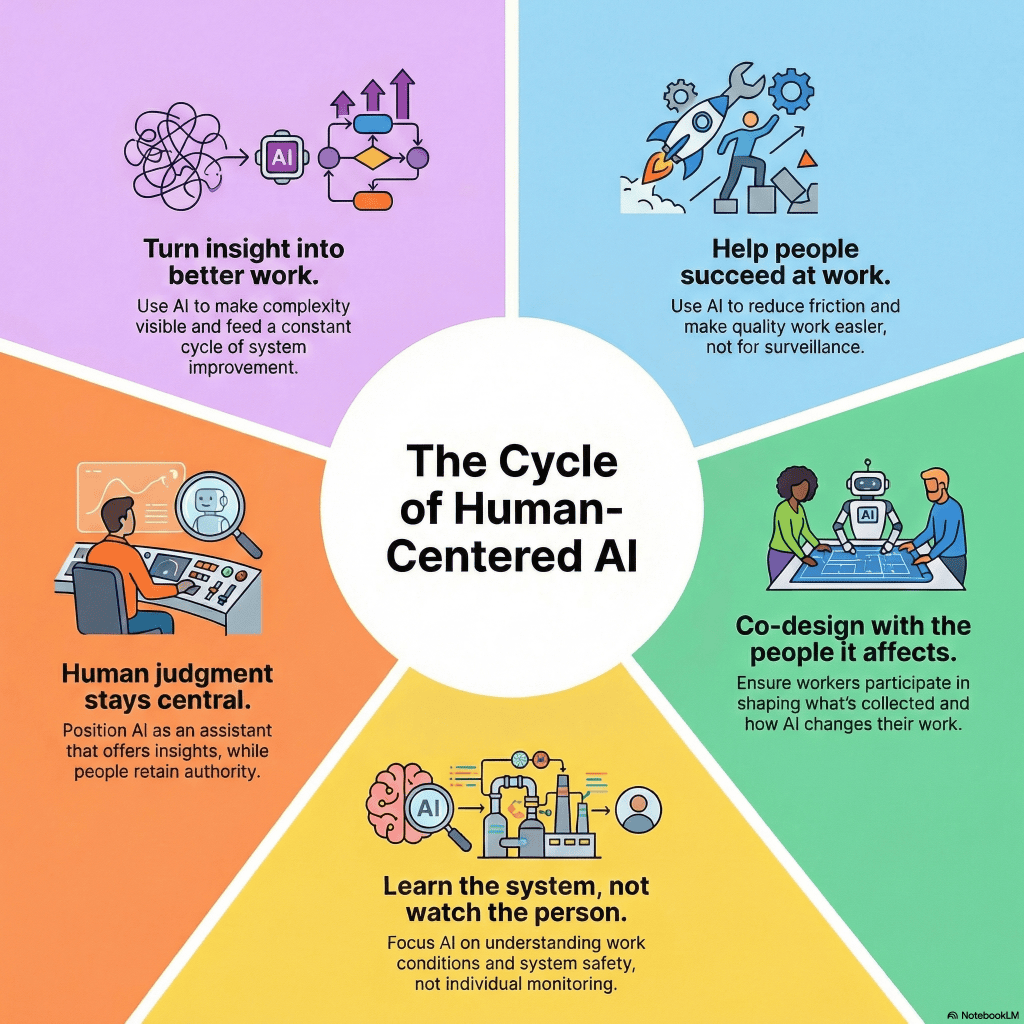

What does a human-centered approach to using AI for HOP and Operational Learning look like?

Use AI to reduce friction and make quality work easier, not for surveillance.

Design and use AI with a purpose that benefits both the organization and the worker: reducing friction, making it easier to do quality and safe work, strengthening coordination, and improving systems. If the primary value is to “surveil, rank, detect, determine, enforce,” it’s not a human-centered use case.

Ensure workers participate in shaping what's collected and how AI changes their work.

Ethical use means workers participate in shaping what’s collected, how it’s interpreted, and how it changes work. People deserve clarity and agency: what data is used, why, how long it’s kept, and how decisions are made.

Focus on understanding work conditions and system safety, not individual monitoring.

Ethical and human-centered AI focuses on system conditions and how work is done every day, not on individual monitoring. That means understanding work demands, system capacity and sensitivity, tool reliability, safeguard/mitigation strength, learning capacity, and psychological safety. If AI increases fear, self-protection, or hiding, it will reduce learning and negatively affect work.

Position AI as an assistant that offers insights, while people retain authority.

AI should be an assistant that offers insights, patterns, and prompts that support understanding, curiosity, and knowledge building. People retain the authority to interpret context and challenge outputs. This keeps responsibility where it belongs and helps prevent “automation bias.”

Use AI to make complexity visible and feed a constant cycle of system improvement.

The ethical payoff is not detection; it’s making complexity more visible, supporting system improvement, and building knowledge and understanding from leaders to the frontline. AI use should feed learning cycles: identify friction, test changes, strengthen controls, and check whether those changes genuinely improve work.